Home Server Take 2

So what went wrong?

Introduction

As some of you dear readers may recall, I had a self-hosted home server that was supposed to be working perfectly. However, as alluded to earlier, it wasn't. To briefly summarise the journey so far: I got my hands on an old office PC and turned it into a self-hosted server, using Proxmox to manage multiple virtual machine (VM) instances. In this configuration, one VM was dedicated to TrueNAS, "the World's #1 Open Source Storage", which handled the RAID configuration as well as a Nextcloud instance (self-hosted cloud storage); the other VM ran an Ubuntu server hosting Jellyfin, the remote media playback tool.

So what went wrong?

Not long after setup, it became clear that there were a handful of edge-case issues that needed solving. Almost immediately, I found out about Docker and realised why the majority of people use it rather than the voodoo magic I chose to do. Docker is essentially a platform that uses OS-level virtualisation to run each piece of software in its own container: a lightweight, isolated environment that shares the host's kernel instead of booting a full VM. It is mainly built for developers and is massively easier to get working than my solution. However, just because we found an easier method doesn't mean we have to go for it.

Back on the topic of why things went wrong: firstly, I could not configure Nextcloud to store its data on the SMB share that can be accessed externally, something I had hoped to accomplish so that I could import media into the Jellyfin instance remotely. Due to this issue (and me accidentally breaking the entire server), I decided to start again from scratch.

Design changes

Generally speaking, the hardware in the system is fine; however, upgrading the CPU wouldn't hurt, so the system has been upgraded to a (used) i7-7700. The RAM was also upgraded from 16GB to 32GB, although this shouldn't make a major difference unless the server is used by multiple users at the same time. Apart from that, there were no other real hardware changes to the system.

Connection issues

For a few months, connecting to the server remotely was simply not possible. Unfortunately, I was not in the same building as the server, meaning I had no way to verify whether I could connect via the internal IP. The whole setback came down to one rather particular issue. It couldn't be that the public IP address of the place had changed, as I could still connect to the network. It turned out that, due to the power settings in the Ubuntu VM (the OS handling Tailscale), the VM shut off after some time of inactivity, so an in-person intervention was needed to fix it.
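A minimal sketch of how this kind of outage could be narrowed down from any always-on machine on the same network: try to open a TCP connection to each service and report which ones answer. The function names here are made up for illustration, and it only tests whether a port accepts connections, not whether the service behind it is healthy.

```python
import socket
from typing import Dict, Tuple


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False


def check_targets(targets: Dict[str, Tuple[str, int]],
                  timeout: float = 3.0) -> Dict[str, bool]:
    """Check every named (host, port) pair and map each name to up/down."""
    return {
        name: is_reachable(host, port, timeout)
        for name, (host, port) in targets.items()
    }
```

For example, `check_targets({"proxmox": ("192.168.1.10", 8006), "jellyfin vm": ("192.168.1.20", 8096)})` would show whether the host is up while the VM is down. The IP addresses are hypothetical placeholders; 8006 and 8096 are the default web ports for Proxmox and Jellyfin, assuming they were not changed.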

After this mishap, another difficulty arose: the external web URLs that pointed to the internal IPs and ports of internal sites (e.g., Jellyfin) stopped working. After some digging, the cause turned out to be that my ISP assigns my router a dynamic IP address. Finding the issue is one thing; dealing with it is another. Switching to a static IP instead of a dynamic one isn't challenging; rather, it is very risky from a cybersecurity point of view.

Cybersecurity

What is a dynamic IP address? A dynamic address is a public IP that changes periodically, at the ISP's discretion. This is mainly done via DHCP, the Dynamic Host Configuration Protocol, which automatically hands devices on a network their IP address and subnet settings. So why do they exist? Short answer: it's cheaper. Essentially, ISPs can lease a specific IP address to a household for a limited time and let it change automatically. This also lets a limited pool of addresses be reused across many customers, whereas a static IP would need to be purchased and reserved for each one. It also arguably boosts security, as third-party connections have to go through an intermediary to reach the address. Despite all of this, it is still possible to make my public IP permanent, or even bind it to a dynamic DNS service, which would help counteract this. However, I personally do not want to jeopardise the cyber safety of my household over a project.
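For illustration, the core of a dynamic DNS updater is just "notice when the public IP changes, then tell the DNS provider". The sketch below shows that logic only. `fetch_ip` and `update_record` are hypothetical callables you would have to supply yourself (for example, a lookup against an IP-echo service and a call to your DNS provider's API); nothing here is tied to a real provider.

```python
import ipaddress
from typing import Callable, Optional


def public_ip_changed(last_known: Optional[str], current: str) -> bool:
    """Return True when the current public IP differs from the last one seen."""
    current_addr = ipaddress.ip_address(current)  # raises ValueError if malformed
    if last_known is None:
        return True  # first run: nothing recorded yet, so treat as changed
    return ipaddress.ip_address(last_known) != current_addr


def sync_dns(last_known: Optional[str],
             fetch_ip: Callable[[], str],
             update_record: Callable[[str], None]) -> str:
    """Fetch the current IP, update DNS only if it changed, return the IP."""
    current = fetch_ip().strip()
    if public_ip_changed(last_known, current):
        update_record(current)  # hypothetical hook into the DNS provider
    return current
```

Run on a schedule (say, every few minutes) with the returned value stored as the next `last_known`, this keeps a hostname pointed at the router even as the ISP rotates the address. It does nothing about the exposure concerns above; it only removes the breakage.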

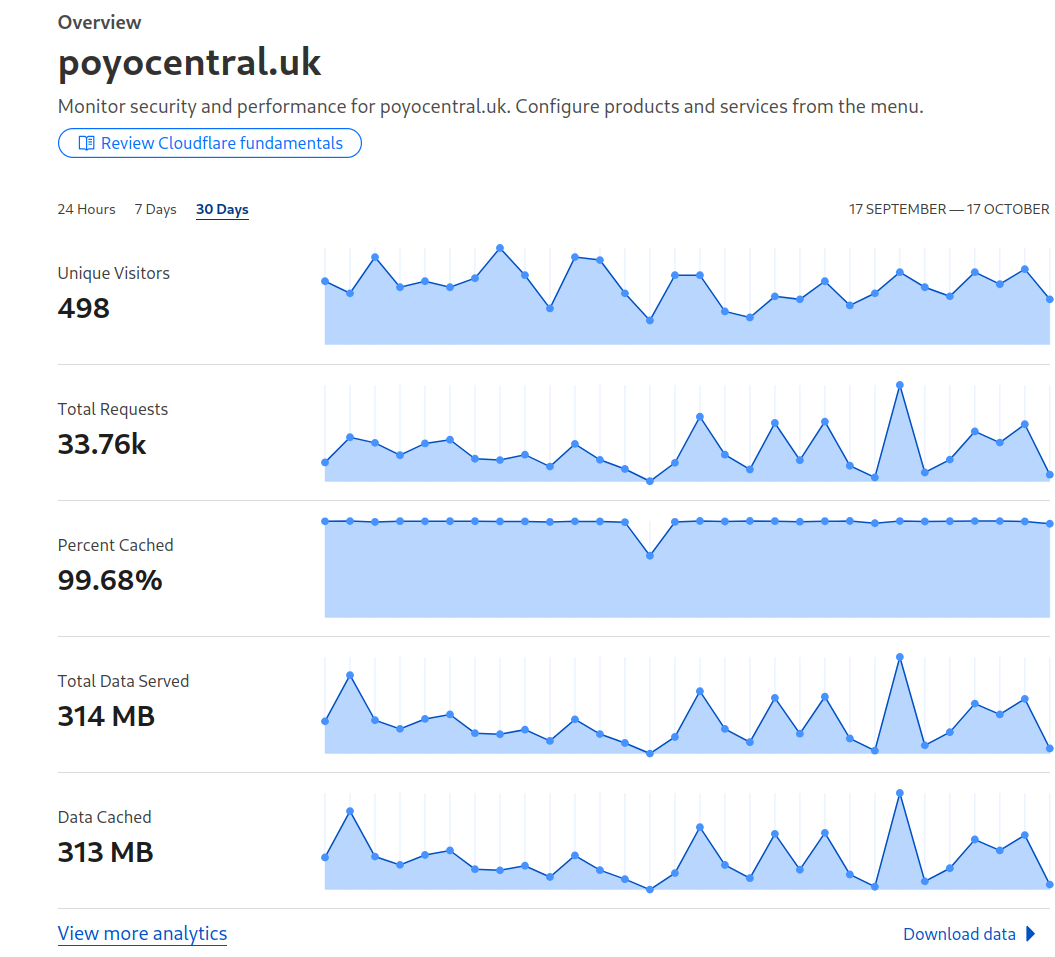

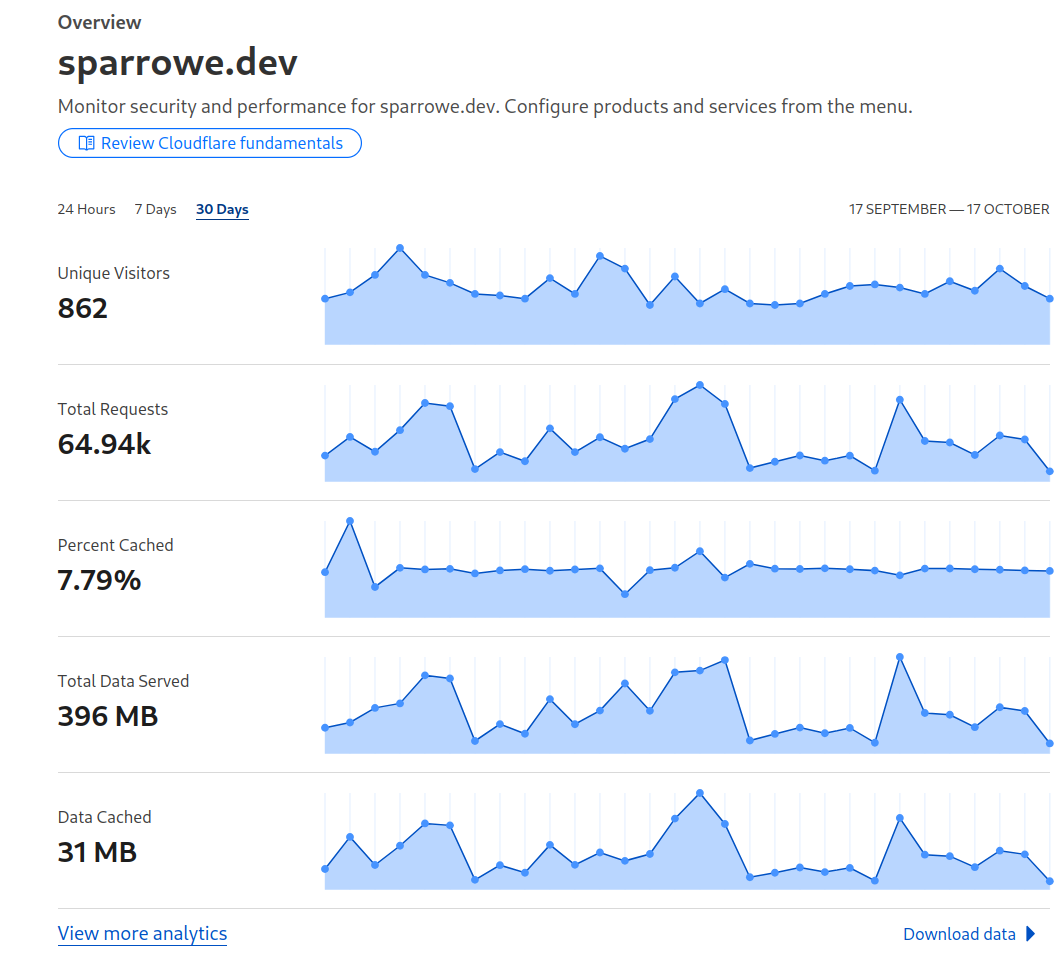

Another cybersecurity risk was pointing a domain directly at the IP address via a reverse proxy. Despite the ease of use of a reverse proxy, it means that anyone can try to infiltrate the home network, including devices that are not listed in the reverse proxy. Additionally, for some unknown reason, within the first 24 hours of having the reverse proxy set up (mind you, without any actual data on the sites), there was a good number of suspicious visits. Provided is an image of an overview of the last 30 days (during which the site was empty); in that period alone, Cloudflare stopped 470 malicious attacks.

Compare that with this site: despite it having nearly 60% more visitors, there were only 57 blocked attackers. The only difference between the two is how they are hosted (this site is run off GitHub Pages), which suggests that a reverse proxy does in fact make a site more of a target.
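To put rough numbers on that comparison, here is a quick back-of-the-envelope calculation. It assumes that "nearly 60% more visitors" means this site had about 1.6 times the visitors of the home server site, and that both figures cover the same 30-day window; neither assumption is exact, so treat the result as an order of magnitude rather than a measurement.

```python
# Figures quoted above from the Cloudflare overviews.
HOME_SERVER_BLOCKED = 470  # attacks blocked on the reverse-proxied site
THIS_SITE_BLOCKED = 57     # attacks blocked on this site (GitHub Pages)

# Assumption: this site had roughly 1.6x the visitors of the home server site.
VISITOR_RATIO = 1.6


def raw_ratio(blocked_a: int, blocked_b: int) -> float:
    """How many times more attacks site A saw than site B, in absolute terms."""
    return blocked_a / blocked_b


def per_visitor_ratio(blocked_a: int, blocked_b: int, visitors_b_over_a: float) -> float:
    """Same comparison, normalised by each site's visitor count."""
    return (blocked_a / blocked_b) * visitors_b_over_a


print(f"raw: {raw_ratio(HOME_SERVER_BLOCKED, THIS_SITE_BLOCKED):.1f}x")
# -> raw: 8.2x
print(f"per visitor: {per_visitor_ratio(HOME_SERVER_BLOCKED, THIS_SITE_BLOCKED, VISITOR_RATIO):.1f}x")
# -> per visitor: 13.2x
```

So the empty, reverse-proxied site saw roughly eight times as many blocked attacks outright, and around thirteen times as many per visitor under these assumptions.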

Reflection

Despite all of this effort, nothing stated here suggests that this project is impossible; it is just a lot of work. Work that does not pay off at all, with a system that keeps resetting over time or breaks down for no apparent reason. Setting up a home server is the easy part; keeping it running is not. Midway through one of the diagnostic steps I paused and thought, why? Why do all this work for a solution that doesn't solve any existing issue? With that, the home server saga ends.